Summary

Curious about how to summarize the essence of a car model through a single vector derived from customer reviews? This blog dives into a unique application of text analytics, leveraging the power of OpenAI's Text-Davinci-003 for text summarization and Sonoisa SBERT for embedding generation.

We compare how these advanced models work in tandem to create singular vectors for car models, which can be easily searched on our demo site. Not only do we showcase the best results, but we also let you interactively search for car models based on their vectors.

1. Introduction

In today's information-rich era, car reviews are indispensable tools for both potential buyers and manufacturers. They provide deep insights into a vehicle's performance, reliability, and overall satisfaction from firsthand users. Yet, distilling meaningful and actionable features from these text-rich evaluations is a challenging endeavor.

The primary challenge stems from the volume and diversity of reviews. They encapsulate expert analyses, personal stories, and myriad perspectives, each holding unique value. Additionally, translating these evaluations into quantifiable metrics or features to bolster search functions is an intricate process. Add to this the layer of navigating the nuances of the Japanese language, which presents its own set of complexities, especially when many analytical models are primarily tailored for English.

Our mission is straightforward yet ambitious: to harness the richness within these Japanese reviews to enhance car search capabilities. We envision a system where users express their needs and, in response, receive car suggestions that align seamlessly with their specifications. To realize this vision, it's essential to move beyond mere keywords and delve deeper into understanding the sentiment and context of these reviews.

Join us as we navigate this challenge, exploring various methods to transform Japanese user reviews into a powerful tool for enriched car search experiences.

2. Background on Text Analysis in the Context of Search

Text analysis, especially in the realm of search, has evolved significantly with the rapid progress of technology. Here, we'll explore how transforming text into numerical vectors improves search and why car reviews, specifically, are pivotal in this process.

2.1 Introduction to the Concept of Transforming Text into Numerical Vectors

At its core, the process of transforming text into vectors is about converting human language, with all its intricacies, into a format that machines can efficiently process. This transformation enables algorithms to "understand" and "interpret" textual information in a more structured manner, facilitating advanced analytics and search capabilities. For text analysis, especially in search functionalities, transforming textual information into numerical vectors becomes imperative. These vectors serve as fingerprints of the text's content and sentiment, capturing the essence of the information.

For example, the phrase "powerful engine" in a car review might be converted into a vector [0.7, 0.9, -0.2,...], with each number representing a certain linguistic or semantic aspect of the phrase.

2.2 Importance of Car Reviews

- A Wealth of Information: Car reviews stand as a nexus of insights for potential buyers and manufacturers alike. They offer a comprehensive understanding of a vehicle's performance, reliability, and overall user satisfaction.

- Expert and Everyday User Views: Both expert critiques and everyday user feedback coalesce to paint a fuller picture of a car's strengths and areas for improvement.

- Zeroing in on Appeal: Reviews elucidate the often intangible characteristics that make certain cars more appealing than others to different user demographics.

2.3 Semantic Search

- Beyond Keywords: The limitations of keyword-based searches have become increasingly evident. Semantic search dives deeper, aiming to grasp the full meaning behind a query.

- Understanding Semantics: This is not just about what is being said, but how and why. By diving into the meaning and context, semantic search elevates the user experience to new heights.

- Leveraging NLP: Advanced Natural Language Processing techniques, especially Transformer models, have been the linchpin in making semantic search a reality.

- Relevance and Resonance: In this new paradigm, search results are ranked not just by mere occurrence of words but by their semantic relevance and contextual alignment.

- Benefits Galore:

- Precision and Experience: Searches are more accurate, and users find what they're looking for more easily and quickly.

- Decoding Ambiguous Queries: The ability to interpret vague or complex search terms.

- Understanding Intent: Beyond words, the system grasps the intent behind the query, making results more aligned with user expectations.

2.4 Real-world Applications

From powering the algorithms behind today's dominant search engines to refining recommendation systems and streamlining knowledge management processes, the applications of semantic search are manifold and pervasive. Its reach promises to expand as technology continues to advance, signaling a transformative era in how we access and process information.

3. Essential Techniques

3.1 Sentence-BERT (Sonoisa Model) - Efficient Sentence Embeddings

Role and Efficiency of Sentence-BERT: The realm of natural language processing experienced a revolution with the advent of BERT (Bidirectional Encoder Representations from Transformers). However, adapting it for tasks requiring sentence embeddings called for a more optimized model. This is where Sentence-BERT (SBERT) comes in. Introduced by Reimers and Gurevych in 2019, SBERT is a modification of the BERT model to specifically produce sentence-level embeddings. Unlike BERT, which generates token-level embeddings, SBERT streamlines the process, providing faster and more efficient outputs tailor-made for sentences. For our specific applications involving Japanese text, we utilize the Sonoisa model -

sonoisa/sentence-bert-base-ja-mean-tokens-v2- fine-tuned for the language.Model Details: The Sonoisa model is an adaptation of the Sentence-BERT specifically tailored for Japanese text. Below are its specifications:

- Model Name: sonoisa/sentence-bert-base-ja-mean-tokens-v2

- Max Sequence Length: 512 tokens, which is ample for most sentences or paragraphs.

- Output Dimensions: 768, aligning with many modern NLP models' output sizes.

- Suitable Score Functions: cosine-similarity, ideal for measuring semantic similarity in dense vector spaces.

- Size: 443MB, which, given its capabilities, is reasonably compact.

- Origin: This model isn't built from scratch. Instead, it's fine-tuned from cl-tohoku/bert-base-japanese-whole-word-masking.

Distinct Features and Its Edge Over BERT: The original BERT is highly versatile, but when it comes to producing fixed-size sentence embeddings, SBERT is the go-to. The latter achieves this using a Siamese or triplet network structure. Instead of working token by token, it's fine-tuned to generate embeddings for entire sentences at once, making it significantly faster and more adept for tasks like semantic similarity comparisons between sentences.

Applications: SBERT's utility isn't limited to just speed and efficiency. Its capabilities are widely recognized in semantic search, where the goal is to match content based on meaning rather than mere keyword matching. Clustering applications also benefit immensely, as grouping based on semantic content becomes more accurate. In our project, the Sonoisa model plays a pivotal role, encoding text from raw reviews, concatenated reviews, or generated summaries into efficient text embeddings.

3.2 Text Tokenization

Importance and Significance: Before text can be processed by models like SBERT or Davinci Text 3, it must undergo a crucial step called tokenization. In essence, tokenization breaks down raw text into smaller units, called tokens. These tokens, which could be words, phrases, or symbols, are the building blocks that machine learning models interpret.

Prepping Text for Sonoisa and Text-Davinci-003:

The tokenization process varies depending on the model and how it is accessed. For Sonoisa, the aim is to segment the text in a way that is compatible with the model's embedding process. It's essential to use the same tokenizer that was used in training the Sonoisa model for consistent and accurate performance.For Text-Davinci-003, when accessed via OpenAI's API, pre-tokenization on the user's end is not required. The API is designed to handle the raw text and prepare it for the model's processing.

Example:

Consider the text, “The quick brown fox jumped over the lazy dog.”

Post tokenization, we could represent this sentence as a sequence of individual words or even smaller units. In a more advanced setup, these words are further mapped to unique integers, giving something like:

[101, 1996, 4248, 2829, 4415, 1999, 1996, 13336, 2163, 1012, 102].

3.3 K-means Clustering - Grouping Similarities

Introduction and Benefits: K-means clustering is an unsupervised machine learning method designed to segregate data into distinct, non-overlapping clusters. By minimizing the within-cluster variance (often termed as inertia), K-means ensures that data within a cluster are as similar as possible. This ability to group similar pieces of data makes K-means invaluable in scenarios where structured categorization is key.

Application in the Project's Context: In the scope of our car review analysis, K-means plays a pivotal role in grouping reviews based on their semantic content. By doing so, we can identify patterns, extract representative reviews, and consequently enhance the effectiveness of subsequent analytical steps, whether it's encoding with SBERT or summarizing with OpenAI's Davinci model.

4. The Case Study: Japanese Car Reviews

4.1 Data Source

The car reviews used in this case study were sourced from a notable Japanese second-hand car website. This platform is well-regarded among automotive enthusiasts and potential buyers, offering insights into various car models, especially in the second-hand market. The reviews from this site give us a blend of experienced car user insights, capturing both the joys and tribulations of owning different car models.

4.2 Objective

- From Reviews to Vectors: The overarching goal is to engineer an effective method that distills car vector representations from these intricate reviews.

- Integrating with Solr for Dense Vector Search: Solr, a popular search platform, comes into play to house these vectors. By indexing these car vectors, we enable a functionality termed Dense Vector Search. This advanced search capability will ensure that the results are not just based on superficial text matches, but a deeper, semantic alignment with user queries.

- Redefining Search: We seek to enable users to run searches using natural text queries, especially in Japanese—a language rich in nuance and context. We aspire to let users phrase their searches in natural Japanese text, bridging the gap between user intent and search results. For instance:

- Example 1: 家族でキャンプや買い物に行ける車 - This translates to searching for a car suitable for family outings like camping and shopping.

- Example 2: 通勤途中に子供を学校に送れる車 - A search for a car that’s practical enough to drop kids off at school during a commute.

4.3 Directory Structure

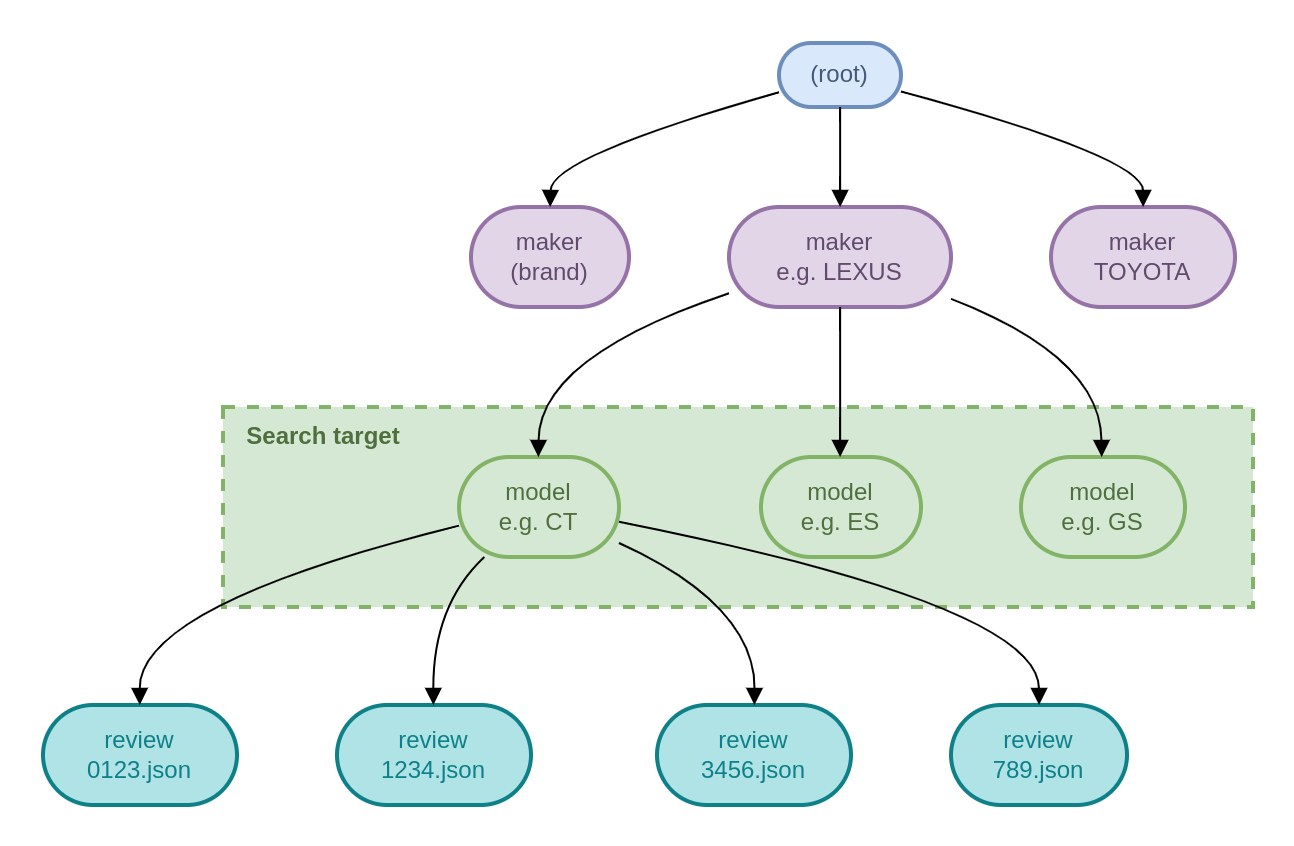

Upon crawling the reviews, the data was systematically organized to facilitate efficient analysis. Below is a representation of the directory structure used for storing the reviews:

Each brand has its dedicated folder, further branching out to individual model folders, which then house the respective reviews.

4.4 User Review Example

To offer a tangible feel of the data we’re handling, here's a JSON snippet representing a typical car review:

{

"title": "ワイルド",

"maker": "レクサス",

"model": "CT",

"eval_text": "【総合評価】 とてもいいと思います! 【良い点】 色がかっこいし綺麗にみえる 【悪い点】 じぶんてきにはおおきさがもっとコンパクトになってほしい",

"eval_comprehensive": " とてもいいと思います! ",

"eval_dislike": " じぶんてきにはおおきさがもっとコンパクトになってほしい",

"eval_like": " 色がかっこいし綺麗にみえる "

}

And here the English translation:

{

"title": "Wild",

"maker": "Lexus",

"model": "CT",

"eval_text": "【Overall Rating】 I think it's very good! 【Pros】 The color looks cool and clean. 【Cons】 Personally, I wish it was more compact.",

"eval_comprehensive": "I think it's very good!",

"eval_dislike": "Personally, I wish it was more compact.",

"eval_like": "The color looks cool and clean."

}

4.5 Data Statistics

Diving into the Numbers:

- Number of Brands: 85

- Number of Models: 1,371

- Total Number of Reviews: 68,441

- Note: While tallying these figures, car models without any reviews were left out of consideration to ensure that our analysis is built on substantial data.

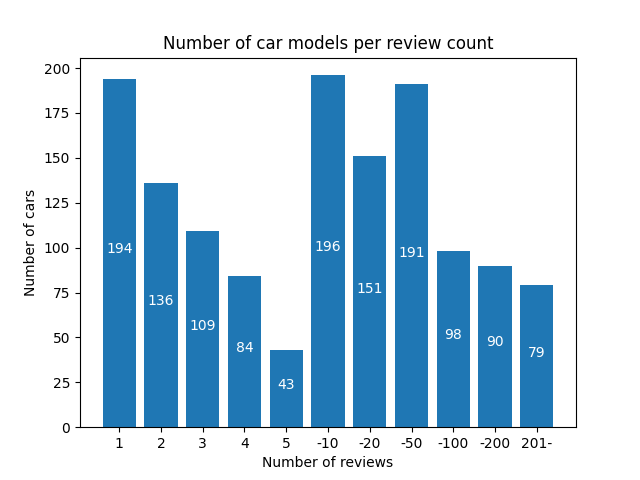

Visual Representation – A Peek into the Dataset’s Distribution:

The following figure provides a graphical representation, showing the number of cars that fall within various review count brackets. This gives insights into how many car models have received a specific range of review counts, highlighting the amount of models that might be widely reviewed or those that have garnered minimal feedback.

5. Method 1: Mean Averaging of Review Car Vectors

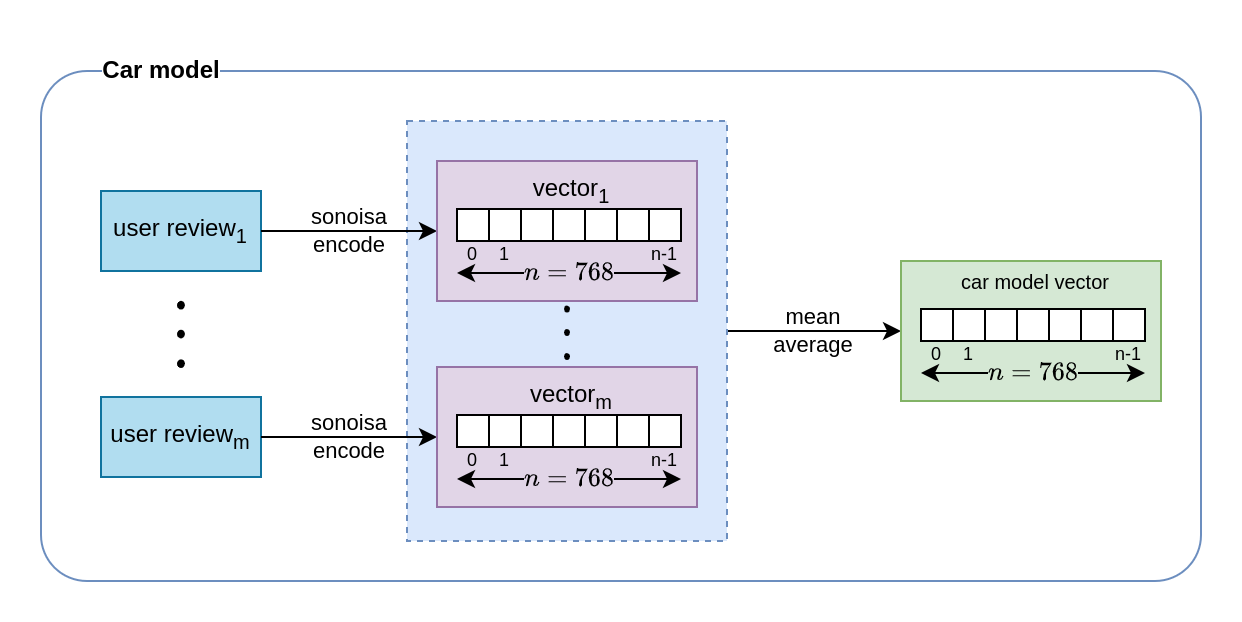

Delving into the methods, our first approach leverages a rather intuitive concept: mean averaging. In the context of user reviews, this involves distilling multiple sentiments and opinions into a singular vector representation.

5.1 Definition

The crux of this method revolves around converting chunks of textual data into numerical vectors. These vectors are essentially condensed forms of text, encapsulating its core sentiment and meaning. When we talk about mean averaging, it pertains to taking an average of these vectors for a set of reviews associated with a specific car model.

5.2 Process

Sentence-BERT model: For each review, the text is first tokenized and then processed through the Sonoisa Sentence-BERT model. The output is a fixed-size vector with 768 dimensions. It's worth mentioning that the model is capable of handling a max sequence length of 512 tokens and uses cosine-similarity as its scoring function.

Averaging Vectors: Once each review is encoded into a vector using SBERT, the next step is aggregation. For every car model, vectors from all its associated reviews are averaged out. This mean vector is then posited to represent the collective sentiment about the car model in question.

5.3 Example

Imagine we have three reviews for a specific car model:

- "Great fuel efficiency but lackluster design."

- "Affordable and efficient but not very stylish."

- "Economical choice for daily commutes, but looks aren't its strong suit."

The Sentence-BERT model would convert each of these reviews into individual vectors. The mean averaging process would then combine these vectors into a single vector representation for this car model, highlighting the recurring theme of fuel efficiency juxtaposed with a potentially unsatisfying design.

5.4 Challenges and Insights

While on the surface, mean averaging seems like an effective way to consolidate multiple reviews, it has its downsides. Especially when faced with a plethora of diverse sentiments, mean averaging can dilute contrasting views.

A glaring pitfall of this method is the risk of blurred distinctions. For instance, if half the reviews praise a car's design while the other half critique it, the mean average might suggest neutrality. In such cases, critical insights could be obscured, leading to potentially misleading results.

This method, while straightforward, provides foundational understanding as we progress to more complex methodologies. The journey through these methods reinforces the layered nature of text analysis, especially within the diverse landscape of car reviews.

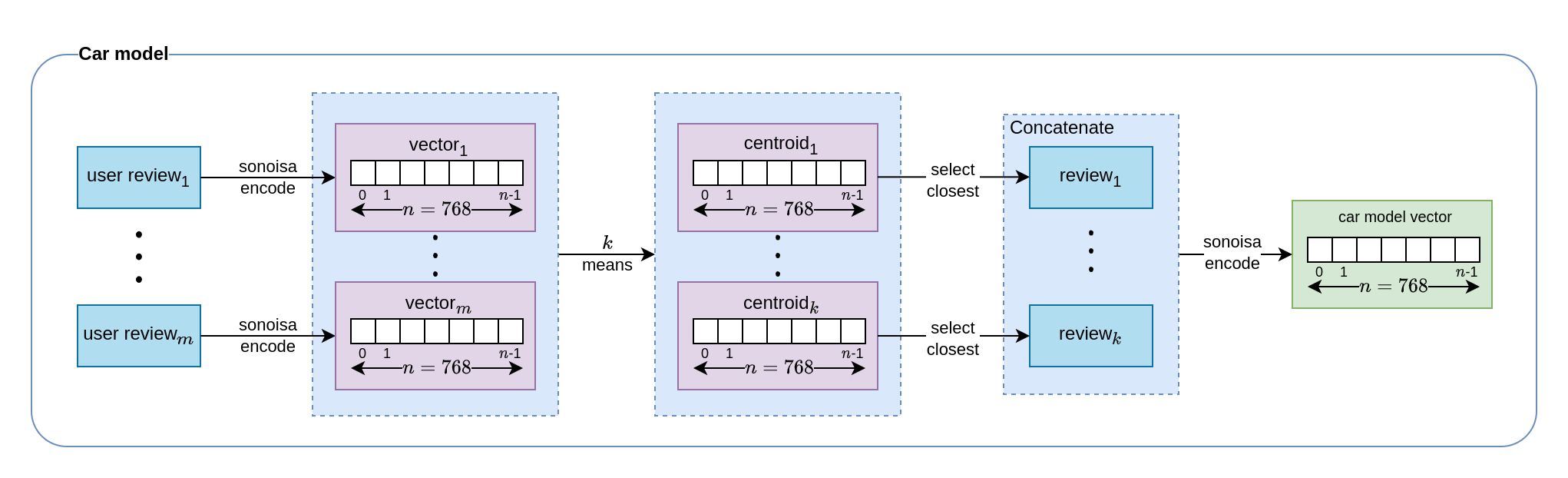

6. Method 2: K-means Clustering and SBERT Model Encoding

As our quest for deriving meaningful car representations continued, we ventured into the territory of clustering, which promised to encapsulate the diversity of reviews while circumventing challenges posed by mean averaging.

6.1 Definition

Clustering in data analysis is the process of grouping data points based on their similarities. For our car reviews, this meant bundling together reviews that conveyed similar sentiments about a car model. This becomes especially pertinent when dealing with vast numbers of reviews, as it provides a compact representation without diluting the essence of the feedback.

6.2 Process

Token Limitation & Strategy

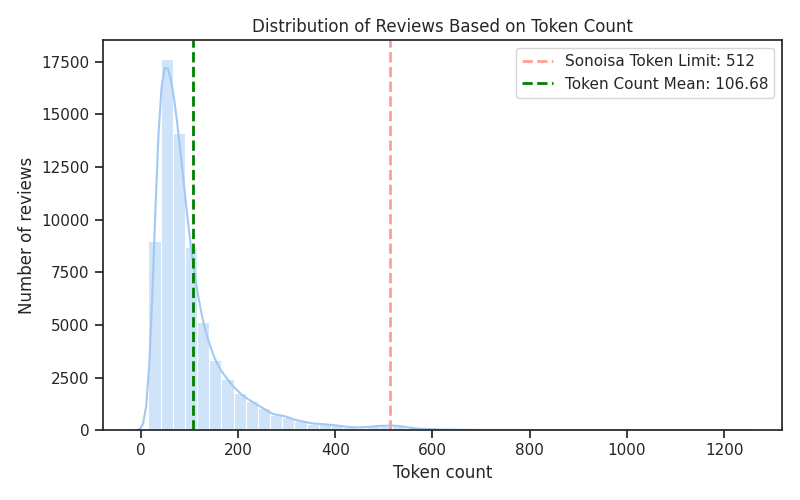

The Sonoisa Sentence-BERT model we use has a 512-token upper limit for each input. Given that the average review contains 106.68 tokens, we had to be cautious in selecting a strategy that maximizes the information without breaching this limit.

Cluster Count Selection: The Role of k

To ensure effective representation within this token limit, we analyzed the optimal number of clusters k for our K-means algorithm. The decision was based on an empirical analysis:

k Value |

Token Limit per Review | Percentage of Reviews Under Token Limit |

|---|---|---|

| 2 | 256 | 93.77% |

| 3 | 170 | 85.39% |

| 4 | 128 | 76.49% |

After careful consideration, k = 3 was selected. It offers a per-review token limit of 170, capturing 85.39% of the reviews. While k = 2 and k = 4 were also considered, they were either too limiting in representational diversity or could not accommodate a significant proportion of reviews, respectively.

K-means Clustering & Encoding

K-means clustering is employed to group reviews based on their semantic content, which we represent through encoded vectors. Once the clusters are formed, we select a review closest to the centroid of each cluster as the most representative. This collection of "centroid reviews" is concatenated and then encoded via Sentence-BERT to generate a unified vector for each car model.

6.3 Example

Let's make this process tangible with a car-centric scenario. Picture a large online forum where car enthusiasts are passionately discussing their favorite car models. Upon a closer look, you notice three prominent categories of discussion: those who are vocal about their love for electric cars, those who swear by the durability of SUVs, and those who are fixated on sports cars for their speed and design.

Using K-means clustering is akin to organizing these forum participants into their respective preference-based groups. Within each of these clusters, we identify the review that is most articulate and reflective of the general sentiment towards that car type—much like spotting the most passionate enthusiast in each group.

This review is considered the "centroid" of the cluster, serving as a snapshot of its collective sentiment. We perform this exercise for each cluster, concatenate these centroid reviews, and encode them through the Sentence-BERT model. This results in a robust and nuanced vector that encapsulates the community's sentiment for each car model.

6.4 Insights

Strengths: One of the pronounced strengths of this method is its ability to capture the richness and diversity of the reviews. By segregating them into clusters, we ensure that varied opinions are acknowledged and represented.

Trade-offs: However, as is the case with many advanced methodologies, there's a trade-off. The depth of analysis achieved comes at the cost of increased computational demands. Particularly when dealing with vast datasets, the clustering process can be resource-intensive.

Challenges with Unpopular Car Models: One issue we noticed is that car models with few reviews may rank higher in our analysis, leading to mismatched results. This is a limitation we need to consider when interpreting the findings.

Filtering for Reliable Results: We found that focusing on car models with at least 20 reviews yields more reliable and relevant results, minimizing the skewing effect of lesser-known models.

While clustering provided a more nuanced method than mean averaging for generating a singular vector that captures user sentiment about various car models, our journey was far from complete. As we'll explore further, even more advanced AI-driven techniques await to refine the way we synthesize these representative vectors from user reviews.

7. Method 3: Leveraging Text Summarization Models for Car Review Condensation

Our quest to synthesize singular vectors that encapsulate the essence of user reviews for different car models led us to experiment with text summarization models. The primary aim was to address the token limitations we've encountered, which restrict the amount of review text we can input.

7.1 Definition

Text summarization is the technology-driven approach to distill extensive textual content into concise yet meaningful summaries. In the scope of our endeavor, it acts as a powerful tool to transform lengthy and varied car reviews into compact, yet sentiment-rich, summaries.

7.2 The Challenge and Our Goals

Challenge: The Sonoisa Sentence-BERT model we employ has a token limitation of 512, which restricts the amount of review text we can use as input without losing crucial information.

Goal: To create a singular vector representation of each car model that effectively encapsulates all reviews without truncation or loss of salient information.

7.3 Proposed Solution

Text Generation Models for Summarization: To tackle the challenge head-on, we explored text generation models such as MT5, and OpenAI's Davinci for summarizing the reviews. These models are skilled at creating summaries that retain the most critical information from the original reviews.

Advantages of Summarization:

- Helps in circumventing the token limitation imposed by Sonoisa SBERT.

- Enables the efficient processing of large volumes of reviews.

- Preserves the essential content, making it more suitable for generating representative vectors.

Workflow:

- Preprocess and tokenize the car reviews.

- Employ text generation models to produce summaries of each review.

- Use Sonoisa SBERT. to generate fixed-size embeddings from these summarized texts.

7.4 Method 3.0: Preliminary Experimentation with the MT5 Japanese Model

Our first foray into text summarization began with the MT5 Japanese Model, mainly due to its free availability.

7.4.1 Model Introduction

- Model Discovered: The model is a fine-tuned version of Google's MT5 (Multilingual T5) model, optimized specifically for Japanese text summarization.

Multilingual Capabilities: This model extends the capabilities of the T5 model and can adapt to a range of NLP tasks like translation, summarization, classification, and question-answering.

Training Data: The model was trained on BBC news articles from the XL-Sum Japanese dataset and is optimized for summarizing texts that incorporate news story elements.

7.4.2 Limitations

Incomplete or Missed Information: While the model performed well in general, it sometimes omitted crucial details from reviews.

- Example: "家の近所に買い物など、短距離のチョイ乗りなら十分役目ははたせますが. プライスに見合った価値はあるし、個性的な車好きには強くお薦め出来るが、日本車的な気軽さを求める人にはお薦めしない。"

- Summary: "日本の車好きにはお薦めしない。"

- Translation: The original text mentions that the car is good for short distances like shopping near home and is recommended for people who like unique cars. However, it is not recommended for those seeking the casual feel of Japanese cars. The summary only captures the last sentiment.

- Example: "家の近所に買い物など、短距離のチョイ乗りなら十分役目ははたせますが. プライスに見合った価値はあるし、個性的な車好きには強くお薦め出来るが、日本車的な気軽さを求める人にはお薦めしない。"

Altered Meaning: In some instances, the meaning of the summarized text diverged from the original review's intent.

- Example: "小回りが良い感じですね、あまり見掛けないですねかわいくて乗り心地もそこそこ。燃費がやたらといいです 軽トラックもありますが同じくらいです。ボディサイズがコンパクトで取り回ししやすく運転しやすかったです。パワーも不足感がなく十分に楽しめました。"

- Summary: "軽トラックで運転しやすかった。"

- Translation: The original text praises the car's maneuverability, fuel efficiency, and sufficient power, among other things. The summary incorrectly suggests that the car is easy to drive, like a light truck, missing other aspects.

- Example: "小回りが良い感じですね、あまり見掛けないですねかわいくて乗り心地もそこそこ。燃費がやたらといいです 軽トラックもありますが同じくらいです。ボディサイズがコンパクトで取り回ししやすく運転しやすかったです。パワーも不足感がなく十分に楽しめました。"

Overlooked Majority Opinions: Sometimes, the model failed to capture the sentiments expressed by the majority of reviewers.

- Example: "やっぱり 古い 。燃費がやたらといいです 軽トラックもありますが同じくらいです 。古いのでかっこがわるいです。"

- Summary: "燃費がやたらと良い。"

- Translation: The original text mentions that the car is old and not very stylish but has good fuel efficiency. Notably, the fact that the car is old is mentioned multiple times in the original review. Despite this repetition, the summary only captures the good fuel efficiency and omits the frequently mentioned age of the car.

- Example: "やっぱり 古い 。燃費がやたらといいです 軽トラックもありますが同じくらいです 。古いのでかっこがわるいです。"

7.4.3 Conclusion

Despite these limitations, the MT5 Japanese summarization model holds promise for capturing the overall sentiment in reviews.

Additional fine-tuning and optimization are needed for the model to produce more reliable summaries in the domain of car reviews.

7.5 Method 3.1: Advanced Text Summarization with OpenAI's Text-Davinci-003 Model

In response to limitations observed in the MT5 model, we turned to OpenAI's Text-Davinci-003 model for superior text summarization capabilities. Part of OpenAI's Codex series, Text-Davinci-003 originates from the GPT-3.5 architecture. It excels in a range of NLP tasks, thanks to its extensive knowledge base and high adaptability. Its notable features include summarization, translation, and question-answering. It also can be fine-tuned for specific applications.

7.5.1 Process

Model Integration: The Text-Davinci-003 model ingests raw reviews and generates concise yet meaningful summaries. Tokenization is not necessary since the API handles this internally.

Output Quality: Despite brevity, the model's summaries maintain the original reviews' essence and sentiment, offering rich data for synthesizing representative vectors.

7.5.2 Start Sequence

A start sequence is essentially a snippet of text that precedes the content you wish to summarize, translate, or analyze in any form. This sequence acts as a directive, informing the model about the type of output expected. For example, a start sequence might be as simple as "Summarize the following:" or as specific as "Identify the positive aspects, negative aspects, and give an overall evaluation."

Purpose and Utility

The point of using a start sequence is to frame the computational context for the model. It:

Shapes the Output: Different start sequences can yield various forms and fidelities of summaries.

Adds Specificity: A well-crafted start sequence can command the model to focus on specific aspects, such as pros, cons, or overall evaluations.

Improves Consistency: A standardized start sequence across multiple reviews ensures that the summaries are generated in a consistent manner, making it easier to compare and contrast them.

Enhances Granularity: The choice of start sequence can influence the level of detail in the summaries, providing either a broad overview or a nuanced interpretation.

Implications for Our Project

In our experiments with Text-Davinci-003 we found that the choice of start sequence had a noticeable impact on the quality and granularity of the generated summaries. By fine-tuning the start sequences used, we could customize the model’s behavior to better suit the needs of our project, be it in generating succinct summaries or in-depth analyses.

Experimentation with Start Sequences

We conducted tests using varied start sequences to measure summary quality.

Case Study: Start Sequence Variation for 3 reviews

We concatenated three car reviews and employed different start sequences to produce varied summaries.

Case study text:

| review 1 | 【総合評価】 おおむね満足です。中古車での購入でしたが元気に走ってくれています。 【良い点】 やっぱり燃費性能は満足です。前車は軽のターボ車で15キロ/L程度でしたが、現在のプリウスは平均で22キロ程度は走ってくれます。(使用エリアは大阪です。) またゆっくり走っている分には、社内も静かです。エルグランドも所有しておりますが、それよりも静かかも・・・ 【悪い点】 高速走行は余裕がないように感じます。アクセルを踏み込めばそれなりに走るんですが、とたんに燃費がダウン。またエンジン音もうるさくなるのでアクセルを踏みたくなくなります。 |

[Comprehensive evaluation] I am generally satisfied. It was a used car purchase, but it is running well. [Good points] After all, the fuel efficiency performance is satisfactory. My previous car was a light turbo car and was about 15 km/L, but the current Prius runs about 22 km on average. (The area of use is Osaka.) Also, the company is quiet because it runs slowly. I also own Elgrand, but it might be quieter than that... [Bad point] I feel that there is no room for high-speed driving. If you step on the accelerator, it will run as it is, but the fuel consumption will suddenly go down. Also, the engine noise becomes louder, so you don't want to step on the accelerator. |

| review 2 | 【総合評価】 確かに燃費はいいが他車種に比べて割り高な感じはします。 周りと同じでいいという方にはおススメですが、走る楽しみは感じられません。 【良い点】 燃費がいい。環境に意識していると周りから思われる。車内が静か。 【悪い点】 信号待ちで、「周りがプリウスだらけ」に遭遇する。 静かすぎて前方の歩行者が気付いてくれない。 |

[Comprehensive evaluation] Fuel efficiency is certainly good, but it feels relatively expensive compared to other models. It is recommended for those who want to be the same as those around them, but it does not give them the pleasure of running. [Good points] Fuel consumption is good. People around you think that you are conscious of the environment. It's quiet inside. [Bad point] While waiting for a signal, I encounter "the surroundings are full of Prius". It's too quiet for pedestrians in front to notice. |

| review 3 | 【総合評価】 老若男女を問わず誰でもすんなり受け入れられるクルマだと思います!使い勝手も従来のセダン、ハッチバックとそん色なく利便性も兼ね備えたクルマです。 【良い点】 燃費は勿論、落ち着いた外観、内装が◎ 【悪い点】 ハイブリッドが故に加速に物足り無さを感じる時があります。 |

[Comprehensive evaluation] I think that it is a car that anyone can easily accept regardless of age or gender! It is a car that is as convenient as a conventional sedan or hatchback in terms of usability. [Good points] Not only is it fuel efficient, but the appearance and interior are also calm. |

Summary tables

Start sequence: "要点を抽出します:"

| ・燃費が良い | ・Good fuel efficiency |

| ・落ち着いた外観 | ・Relaxed appearance |

| ・内装 | ・Interior |

| ・ハイブリッドなので加速が物足り無い | ・Because it is a hybrid, acceleration is unsatisfactory |

Start sequence: = "良い点、悪い点、総合評価を抽出します。"

| 【良い点】 燃費性能、落ち着いた外観、内装、利便性がある。 | [Good points] Fuel efficiency, calm appearance, interior, and convenience. |

| 【悪い点】 高速走行時の加速性能に不満がある。 | [Bad point] I am dissatisfied with the acceleration performance at high speed. |

| 【総合評価】 プリウスは燃費性能が高く、落ち着いた外観、内装、利便性も兼ね備えたクルマです。高速走行時の加速性能に不満がありますが、全体的に満足できるクルマだと思います。 | [Comprehensive evaluation] The Prius is a car that has high fuel efficiency, a calm appearance, interior decoration, and convenience. Although I am dissatisfied with the acceleration performance at high speeds, I think that it is a car that I can be satisfied with overall. |

Case Study: Start Sequence Variation for 18 reviews

We assessed the effectiveness of start sequences using 18 reviews corresponding to the car model Toyota Prius, the review text is omitted for brevity.

Case 1: All-in-One Summary

Obtaining pros, cons and overall rating in one go.

- Concatenated field:

eval_comprehensive - Num tokens: 2,902

- Start sequence: "良い点、悪い点、総合評価を抽出します。"

- Summary response:

| 【良い点】 燃費が良い。ハイブリッドなので優秀です。静か。スタイリッシュなデザイン。 | [Good points] Fuel consumption is good. It's a hybrid, so it's great. quiet. Stylish design. |

| 【悪い点】 少し値段が高い。車内空間が狭い。 | [Bad point] The price is a little high. The space inside the car is small. |

| 【総合評価】 とても良い車です。静かで燃費が良く、デザインもスタイリッシュなので、とても満足しております。 | [Comprehensive evaluation] It is a very good car. I am very satisfied because it is quiet, fuel efficient, and the design is stylish. |

Case 2: Individual aspect summaries

The total tokens amounts to 2,367.

We show in bold the additional information extracted vs Case 1.

Positive Aspects:

- Concatenated field:

eval_like - Num tokens: 655

- Start_sequence: "車のこれまでの良い点をまとめる:"

- Summary response:

| 燃費、静粛性、低燃費、スタイリッシュなデザイン、乗り心地が良い。 | Good fuel efficiency, quietness, low fuel consumption, stylish design, and comfortable ride. |

Negative Aspects:

- Concatenated field:

eval_dislike - Num tokens: 683

- Start_sequence: "車のこれまでの悪い点をまとめる:"

- Summary response:

| ・燃費が実際よりも低い ・広さが狭めな ・静粛性がない ・乗り心地が少々難しい ・値段が少し高め |

・Lower fuel consumption ・ Narrow space ・No quietness ・Slightly difficult to ride ・The price is a little high |

Overall Evaluation:

- Concatenated field:

eval_comprehensive - Num tokens: 1029

- Start_sequence: "これまでの車のレビューをまとめます:"

- Summary response:

| 燃費がとても良く、安定してて、高性能なエンジンで、見た目もスタイリッシュで、乗り心地も良く、エアバッグやABSなどのセーフティー装備も充実しています。 | It has very good fuel economy, is stable, has a high-performance engine, looks stylish, has a comfortable ride, and is equipped with safety equipment such as airbags and ABS. |

Information Loss Analysis

While the start sequence "良い点、悪い点、総合評価を抽出します。" worked well for three concatenated reviews, it yielded overly condensed summaries for a set of 18 reviews.

In Case 1, while the summary does capture some of the essential elements like good fuel efficiency and a stylish design, it is notably less detailed than the summaries in Case 2.

In Case 2, each aspect—pros, cons, and overall rating—is summarized individually, leading to a more granular and nuanced summary. For instance, Case 2's summary for eval_dislike captures not just the high price but also additional points like "No quietness" and "Slightly difficult to ride"

This comparison shows the importance of tailoring the start_sequence for specific needs.

7.5.3 Implementation Strategy

The evolution of our implementation strategy aimed at balancing summary quality with cost-effectiveness.

Initial & Revised Strategies: We initially aimed to summarize 3000-token chunks of concatenated reviews. Due to cost considerations, this approach was later modified.

Start Sequence Customization: We achieved better granularity by altering the start sequence for summary generation, especially when working with larger sets of reviews.

Pricing and Token Estimates: With an estimated total of 16,872,777 tokens in all reviews and a cost of $0.02 per 1k tokens, the initial strategy would have incurred a cost of more than $320 USD.

- Revised Strategy: To mitigate costs, we decided to summarize up to 3000 tokens of concatenated reviews per car model. We also reduced the car model sample size to 458, prioritizing models with more than 20 reviews. This approach aimed to prioritize efficiency while preserving crucial information.

Pipeline:

- K-means Review Selection: For each car model group, we employed K-means clustering to find a group of K reviews that maximizes the number of tokens up to a limit of 3000. The K-means algorithm utilized the

eval_comprehensivefield vector representation to make its selections. - Concatenation and Preparation: Once the reviews were selected, we concatenated the fields

eval_comprehensive,eval_dislike, andeval_likeindividually for each group. This method allowed us to maintain the integrity of each review type. - Tailored Summarizations: We then employed different start sequences tailored for each category of the review (

eval_comprehensive,eval_dislike,eval_like) to generate summaries. For example, the start sequences used were:

| Field | Start sequence |

|---|---|

eval_comprehensive |

これまでの車のレビューをまとめます: (Summarizing the car reviews so far) |

eval_dislike |

車のこれまでの悪い点をまとめる: (Summarizing the negative aspects of the car so far) |

eval_like |

車のこれまでの良い点をまとめる: (Summarizing the positive aspects of the car so far) |

- Final Formatting: These summaries were then concatenated in the format:

"【良い点】\n {eval_like_summary}\n【悪い点】\n{eval_dislike_summary}\n【総合評価】\n{eval_comprehensive_summary}" - Vector Embedding: The Sonoisa model was then used for generating a representative vector from each summary.

7.5.4 How to Use Text-Davinci-003 for Summarization

For those interested in how to use the Text-Davinci-003 model for text summarization, here's a step-by-step guide:

API Key Registration: You will need to sign up for an API key to interact with the model. Go to the section API keys in your User Settings to apply for one.

Library Installation: Install the OpenAI Python Library: You can easily install it using pip.

pip install openaiText Preparation: Ensure the text is well-structured; manual tokenization is unnecessary

Set Up the Completions API Request: The API call parameters must be configured as follows:

- Prompt:

Define the input text to be summarized as aprompt.

Determine an effective start sequence for text summarization. - Model: Specify the

modelastext-davinci-003. - Max Tokens: Set the

max_tokensparameter to limit the length of the summary (e.g., 1000 tokens). - Temperature: Choose the

temperatureparameter (e.g., 0.7) to control the level of randomness in text generation. - Top_P: Configure the

top_pparameter (e.g., 1) to control the sampling of the generated text.

- Prompt:

Make the API Call: Utilize the OpenAI Python library to make the API call with the configured parameters.

The following Python code snippet can be used to generate summaries.

import openai openai.api_key = "your-api-key" text = "Your text here." start_sequence = "Summarize the following:" response = openai.Completion.create( model="text-davinci-003", prompt=f"{text}\n{start_sequence}", temperature=0.7, max_tokens=256, top_p=1, frequency_penalty=0, presence_penalty=0 ) summary = response['choices'][0]['text'].strip() print("Summary:", summary)Result Analysis: Scrutinize the generated summary for content and sentiment to ensure it aligns with the source material. For further details on Text-Davinci-003's potential, refer to the official documentation.

7.5.5 Cost and Efficiency

| Price ($) | Tokens prompt | Tokens generated | Total tokens | Average tokens / review | Average price / review ($) | |

|---|---|---|---|---|---|---|

| Like text summaries | 8.15 | 339735 | 69190 | 408925 | 78.44 | 0.0016 |

| Dislike text summaries | 6.43 | 268873 | 61323 | 330196 | 63.34 | 0.0013 |

| Comprehensive text summaries | 9.38 | 362735 | 97297 | 460032 | 88.25 | 0.0018 |

| Totals | 24.78 | 971343 | 227810 | 1199153 | 230.03 | 0.0047 |

Price Breakdown: The total expenditure for using the Text-Davinci-003 model for summarization came up to $24.78, which covered 5,213 reviews. The average cost per review was approximately $0.0047.

7.5.6 Challenges and Insights

Inconsistency in Formatting: One hurdle was managing varying formats across different summarized reviews, complicating their uniform presentation.

Cost Considerations: Utilizing the Text-Davinci-003 model was financially demanding, costing over $320 for our dataset's summarization. However, the quality of the summaries justifies the expense, if budget is not a constraint.

Start Sequence Adaptations: One insight was that the start sequence significantly impacts summary quality. By testing with different start sequences for different sets of concatenated reviews, we were able to achieve better detail capture in the summaries.

7.5.7 Conclusion

We found that Text-Davinci-003 could produce high-quality summaries. The primary challenges lie in the high costs involved and ensuring formatting consistency across summaries. Nonetheless, if budget constraints are minimal, this model offers promising results.

We invite you to explore our demo site to see these technologies in action. Visit the demo site here.

8. Conclusion

As we journeyed through the intricacies of analyzing Japanese car reviews via three distinct AI-powered methodologies, it became increasingly evident that there's no one-size-fits-all approach. Each method, with its unique facets, contributed to our understanding, but also posed challenges that warrant further exploration.

8.1 Recap of the Methods

Method 1: Mean Averaging of Review Car Vectors provided a straightforward way to condense diverse sentiments into a singular vector representation. However, the simplicity of this method was both its strength and its Achilles' heel, as the averaged vectors often blurred the lines between contrasting opinions.

Method 2: K-means Clustering and SBERT Model Encoding added depth and granularity to the analysis. By clustering reviews and then encoding them, we managed to capture a richer spectrum of sentiments. Yet, this approach demanded more computational resources and introduced complexities associated with clustering.

Method 3: OpenAI Summarization (Text-Davinci-003 Model) showcased the potential of AI-driven text summarization. The Text-Davinci-003 model produced concise yet context-rich summaries, making it easier to distill the essence of lengthy reviews. On the flip side, cost implications and formatting inconsistencies posed hurdles.