Up to this point we have focused on the motivations and design of the Combined Query work, and on how RRF fits into Solr’s wider hybrid search story. For most practitioners, however, the decisive question is not just how the feature is described on paper, but how it behaves under real queries and data. Fortunately, Sonu Sharma’s implementation is publicly available, which makes it possible to test the approach today and build an intuition for how Combined Query and RRF interact in practice. The following sections describe, step by step, how to obtain the branch, build it, and run a local Solr instance so that you can reproduce the experiments and adapt them to your own use cases.

1. Clone the Combined Query branch

The first step is to clone the contributor’s branch directly. The PR is based on the feat_combined_query branch in the ercsonusharma/solr fork:

git clone --branch feat_combined_query --single-branch https://github.com/ercsonusharma/solr.git

cd solr

At this point the working tree contains the Combined Query implementation (CombinedQueryComponent, CombinedQuerySearchHandler, the RRF combiner, etc.), ready to be built and exercised in a local Solr instance.

2. Build a dev distribution

From the Solr root:

./gradlew dev

This produces a self-contained "dev" Solr instance under solr/packaging/build/dev (same layout as a normal binary distro, with bin/solr, server/, configsets/, etc.).

3. Sanity-check: run the PR’s own tests (optional)

The PR ships a bunch of tests specifically for the combined query feature (e.g. CombinedQueryComponentTest, CombinedQuerySearchHandlerTest, and CombinedQuerySolrCloudTest).

From the repo root:

./gradlew :solr:core:test --tests '*CombinedQuery*'

If these pass, you know your environment matches what the author was running.

4. Start Solr from the dev distribution

From the dev distro root:

cd solr/packaging/build/dev

bin/solr start

Optionally verify that Solr is running and that you’re in SolrCloud mode:

bin/solr status

You should see something along these lines:

Solr process 14714 running on port 8983

{

"solr_home":"~/solr/solr/packaging/build/dev/server/solr",

"version":"10.0.0-SNAPSHOT aa0c23ab0cae7f1b498a9864f8324a81cefafe64 [snapshot build, details omitted]",

"startTime":"Wed Nov 19 10:42:15 JST 2025",

"uptime":"0 days, 0 hours, 0 minutes, 13 seconds",

"memory":"227.6 MB (%44.4) of 512 MB",

"cloud":{

"ZooKeeper":"127.0.0.1:9983",

"liveNodes":"1",

"collections":"0"

}

}

The key part is the cloud section: this tells you Solr is running in SolrCloud mode with a local ZooKeeper and a single live node. The combined query / RRF feature is designed with distributed search in mind, so using SolrCloud here matches the intended deployment model.

5. Prepare a configset that uses the combined query handler

Before we can send combined RRF queries to Solr, we need a collection whose configuration knows about the new handler and component that the PR adds:

- CombinedQuerySearchHandler

- CombinedQueryComponent

The PR already includes a test solrconfig and a test schema that are wired up for this feature:

- solr/core/src/test-files/solr/collection1/conf/solrconfig-combined-query.xml

- solr/core/src/test-files/solr/collection1/conf/schema-vector-catchall.xml

Solr’s JUnit tests spin up a mini-Solr using these files, so reusing them is the fastest way to get a local setup that behaves like the tests: the same handler, the same component chain, and a schema that already contains a dense vector field.

5.1 Create a new configset

In your dev distribution, start by cloning the _default configset so you inherit all the usual logging and sane defaults:

cd solr/packaging/build/dev/server/solr

cp -r configsets/_default configsets/combined-query

At this point configsets/combined-query is just a copy of _default.

Next we’ll overwrite its solrconfig.xml with the PR’s test solrconfig, and then prepare a clean schema.xml.

5.2 Reuse and clean up the PR’s test solrconfig

From your source tree where you checked out the PR branch, locate:

solr/core/src/test-files/solr/collection1/conf/solrconfig-combined-query.xml

Then, in the dev distro, copy it into the new configset:

# still in solr/packaging/build/dev/server/solr

cp ~/solr/solr/core/src/test-files/solr/collection1/conf/solrconfig-combined-query.xml \

configsets/combined-query/conf/solrconfig.xml

The test solrconfig is a good starting point, but it contains a couple of test-only components that don’t exist in a normal Solr server:

- org.apache.solr.handler.component.ForcedDistributedComponent (wired as fd and used by /tfd)

- org.apache.solr.handler.component.combine.TestCombiner (wired inside the combined_query search component)

If you reuse the file unchanged, core creation fails with ClassNotFoundException for these classes. So we make two small edits:

Use /combined and remove the test-only distributed component

In solrconfig.xml, change the search handler to something like:

<requestHandler name="/combined"

class="solr.CombinedQuerySearchHandler">

</requestHandler>

Then, update the initParams so they reference /select and /combined, and drop /tfd entirely:

<initParams path="/select,/combined">

<lst name="defaults">

<str name="df">text</str>

</lst>

</initParams>

You can also remove the ForcedDistributedComponent and its dedicated test handler /tfd from the file; they are only used by the test suite to force certain distributed behaviors.

Strip out the test combiner, keep the real RRF combiner

In the original test config, the combined-query search component looks roughly like this:

<searchComponent class="solr.CombinedQueryComponent" name="combined_query">

<int name="maxCombinerQueries">2</int>

<lst name="combiners">

<lst name="test">

<str name="class">org.apache.solr.handler.component.combine.TestCombiner</str>

<int name="var1">30</int>

<str name="var2">test</str>

</lst>

</lst>

</searchComponent>

For our setup, remove the <lst name="combiners">…</lst> block entirely, leaving just:

<searchComponent class="solr.CombinedQueryComponent" name="combined_query">

<int name="maxCombinerQueries">2</int>

</searchComponent>

That’s enough for RRF to work. The real Reciprocal Rank Fusion implementation lives in the server code and is activated via request parameters (for example, combiner=true, combiner.algorithm=rrf, combiner.upTo, combiner.rrf.k, etc.), so we don’t need to wire a custom combiner class in solrconfig.xml just to run standard hybrid RRF queries.

The TestCombiner in the test config is there mainly to:

- Demonstrate that you can plug in different, custom combiners via configuration, and

- Give the test suite a simple, deterministic combiner whose behavior is easy to assert.

For a practical RRF setup, we keep CombinedQueryComponent but drop TestCombiner and friends. After these small edits, the combined-query config works cleanly in a normal dev SolrCloud node and is ready to accept combined keyword + vector RRF requests.
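If you would rather script the removals than edit the file by hand, a minimal sketch along the following lines may help. It only covers the deletions (the ForcedDistributedComponent declaration, the /tfd handler, and the combiners block); renaming the handler to /combined and adjusting initParams are still manual. The sample XML is a hypothetical, cut-down stand-in modeled on the snippets above, not the real test file, and the function name strip_test_only is invented for illustration.

```python
import xml.etree.ElementTree as ET

# Test-only class named in the article; any element declared with it is dropped.
TEST_ONLY_CLASSES = {"org.apache.solr.handler.component.ForcedDistributedComponent"}

def strip_test_only(solrconfig_xml):
    """Remove the test-only pieces from a solrconfig XML string."""
    root = ET.fromstring(solrconfig_xml)
    # Snapshot the tree first so removing children does not disturb iteration.
    for parent in list(root.iter()):
        for child in list(parent):
            is_test_class = child.get("class") in TEST_ONLY_CLASSES
            is_tfd_handler = child.tag == "requestHandler" and child.get("name") == "/tfd"
            is_combiners = child.tag == "lst" and child.get("name") == "combiners"
            if is_test_class or is_tfd_handler or is_combiners:
                parent.remove(child)
    return ET.tostring(root, encoding="unicode")

# Hypothetical sample: the real file wires these elements in more detail.
sample = """<config>
  <searchComponent class="org.apache.solr.handler.component.ForcedDistributedComponent" name="fd"/>
  <requestHandler name="/tfd" class="solr.SearchHandler"/>
  <searchComponent class="solr.CombinedQueryComponent" name="combined_query">
    <int name="maxCombinerQueries">2</int>
    <lst name="combiners">
      <lst name="test">
        <str name="class">org.apache.solr.handler.component.combine.TestCombiner</str>
      </lst>
    </lst>
  </searchComponent>
</config>"""

cleaned = strip_test_only(sample)
print(cleaned)
```

Note that ElementTree's default parser discards XML comments, so the license header and inline comments of the original file would be lost; for a one-off setup, hand-editing is just as reasonable.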

5.3 Create a clean schema.xml and add a vector field

Now, let's prepare a schema and include only the vector bits from the test schema.

First, create schema.xml from _default’s managed schema:

cd solr/packaging/build/dev/server/solr

cp configsets/_default/conf/managed-schema.xml configsets/combined-query/conf/schema.xml

This schema.xml has all the usual text and numeric fields you’d expect from _default.

Next, open schema-vector-catchall.xml from the PR in your editor (we’ll only copy small pieces):

solr/core/src/test-files/solr/collection1/conf/schema-vector-catchall.xml

From that file, copy the dense vector field type and one vector field definition into configsets/combined-query/conf/schema.xml. Concretely, add this to the <fieldType> section:

<fieldType name="knn_vector_cosine"

class="solr.DenseVectorField"

vectorDimension="384"

similarityFunction="cosine"/>

and add this to the <fields> section:

<field name="vector"

type="knn_vector_cosine"

indexed="true"

stored="true"/>

- vectorDimension must match your embedding size (e.g., 384 or 768 in a real system). For tests they used 4, which is enough for PoC purposes.

- similarityFunction controls how vector similarity is computed (cosine, dot product, euclidean).

- The vector field is where you’ll store per-document embeddings and later query with the knn or knn_text_to_vector parsers.

Additionally, since there is no dedicated text field in the schema, we add:

<field name="text"

type="text_general"

indexed="true"

stored="true"

multiValued="true"/>

Our schema now has:

- At least one free-text field for keyword search, and

- At least one dense vector field for k-NN search.

That’s all we need to demonstrate a hybrid query: one keyword sub-query + one k-NN sub-query fused via RRF.

6. Create a collection using that configset

The next step is to turn the configset into a real collection we can index documents into and query from.

6.1 Create the hybrid collection

We create a new collection named hybrid, telling Solr to use our combined-query configset:

cd solr/packaging/build/dev

bin/solr create -c hybrid -d server/solr/configsets/combined-query/conf

What this does:

- Registers a collection called hybrid in SolrCloud.

- Uses the combined-query config directory we prepared (PR solrconfig + vector schema).

- By default, creates the collection with a single shard and a single replica on your local node.

Once this command completes, we have:

- A live hybrid collection whose solrconfig.xml includes the CombinedQuerySearchHandler and CombinedQueryComponent.

- A schema that already defines a dense vector field, ready for k-NN + keyword hybrid queries.

7. Index some simple documents

To begin, we will focus only on keyword search and ignore vectors for the moment. Let’s index a small set of example documents into the hybrid collection using a single text field:

curl -X POST "http://localhost:8983/solr/hybrid/update?commit=true" \

-H "Content-Type: application/json" -d '[

{"id":"1", "text":"solr hybrid search with keyword matching"},

{"id":"2", "text":"vector search with knn and dense vectors"},

{"id":"3", "text":"bm25 keyword search for solr"},

{"id":"4", "text":"neural semantic search in apache solr"},

{"id":"5", "text":"hybrid retrieval: combining vector and keyword search"}

]'

At this stage, the text field is the only one we care about; it provides enough content to verify that the combined query handler works correctly with plain keyword-based (BM25) retrieval. We will introduce vector fields and true hybrid (keyword + k-NN) queries later.

8. Run a simple multi-keyword combined query (no vectors yet)

With a few documents indexed into the text field, we can exercise the combined query handler using two keyword sub-queries and fusing their results with Reciprocal Rank Fusion (RRF).

The combined query JSON has three important parts:

- A top-level queries map, where each entry is a sub-query (lucene, edismax, knn, etc.).

- A params block that enables the combiner and configures RRF.

- Parameters that tell the component which sub-queries to combine.

For the hybrid collection we created above, start with two simple Lucene queries over the text field and ask Solr to return JSON:

curl -X POST "http://localhost:8983/solr/hybrid/combined?wt=json" \

-H "Content-Type: application/json" -d '{

"queries": {

"q1": { "lucene": { "df": "text", "query": "solr" } },

"q2": { "lucene": { "df": "text", "query": "vector" } }

},

"limit": 10,

"fields": ["id","score", "text"],

"params": {

"combiner": "true",

"combiner.algorithm": "rrf",

"combiner.upTo": "100",

"combiner.rrf.k": "60",

"combiner.query": ["q1","q2"],

"combiner.resultKey": ["q1","q2"]

}

}'

- queries.q1 and queries.q2 are ordinary Lucene queries with different terms (solr and vector) but the same default field (text).

- combiner=true switches the handler into combined query mode.

- combiner.algorithm=rrf, combiner.upTo, and combiner.rrf.k control the RRF fusion behavior.

- combiner.query=["q1","q2"] and combiner.resultKey=["q1","q2"] tell the component explicitly which JSON sub-queries to run and combine.

The response is:

{

...

"response":{

"numFound":3,

"start":0,

"maxScore":0.016393442,

"numFoundExact":false,

"docs":[{

"id":"3",

"text":["bm25 keyword search for solr"],

"score":0.016393442

},{

"id":"2",

"text":["vector search with knn and dense vectors"],

"score":0.016393442

},{

"id":"5",

"text":["hybrid retrieval: combining vector and keyword search"],

"score":0.016129032

},{

"id":"1",

"text":["solr hybrid search with keyword matching"],

"score":0.016129032

},{

"id":"4",

"text":["neural semantic search in apache solr"],

"score":0.015873017

}]

}

To understand what the combiner is actually doing, it is useful to compare this fused result with the baseline rankings for q1 and q2 separately. You can run them as normal /select queries:

# Baseline for q1: search for "solr" in text

curl "http://localhost:8983/solr/hybrid/select?wt=json" \

--get \

--data-urlencode "q=solr" \

--data-urlencode "df=text" \

--data-urlencode "rows=10"

Response:

{

...

"response":{

"numFound":3,

"start":0,

"numFoundExact":true,

"docs":[{

"id":"3",

"text":["bm25 keyword search for solr"]

},{

"id":"1",

"text":["solr hybrid search with keyword matching"]

},{

"id":"4",

"text":["neural semantic search in apache solr"]

}]

}

# Baseline for q2: search for "vector" in text

curl "http://localhost:8983/solr/hybrid/select?wt=json" \

--get \

--data-urlencode "q=vector" \

--data-urlencode "df=text" \

--data-urlencode "rows=10"

Response:

{

...

"response":{

"numFound":2,

"start":0,

"numFoundExact":true,

"docs":[{

"id":"2",

"text":["vector search with knn and dense vectors"]

},{

"id":"5",

"text":["hybrid retrieval: combining vector and keyword search"]

}]

}

Comparing the individual /select responses with the /combined response shows how the RRF combiner reorders documents when it merges the two keyword result sets. This gives you a concrete, low-noise way to verify that the combined query handler really is fusing multiple sub-queries, rather than simply returning the results of one.

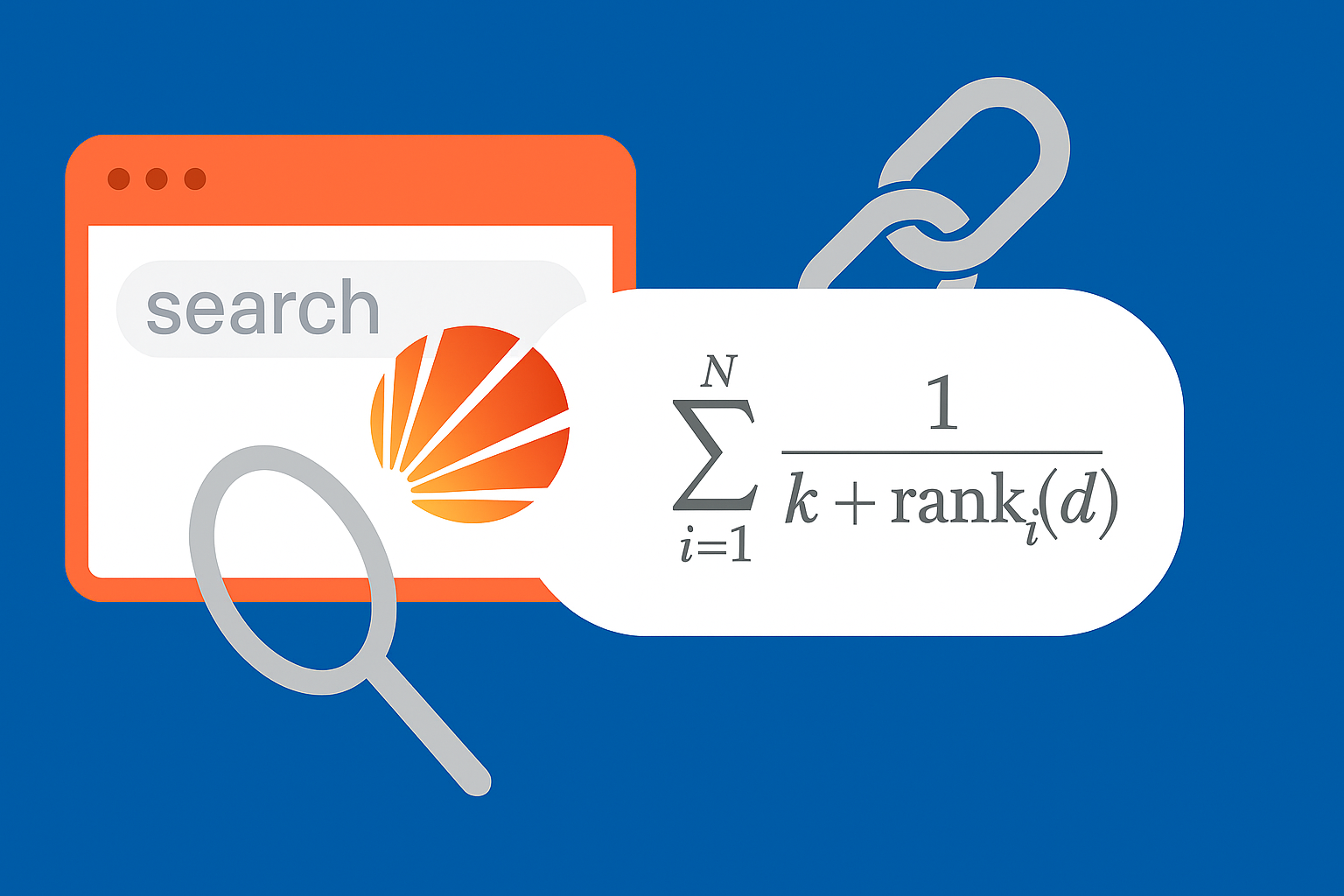

If we compare the three responses, we can see that the combined scores line up with the standard RRF formula:

score(d) = Σ over queries q of 1 / (k + rank_q(d)) with k = 60.

In the q=solr baseline, document id=3 is rank 1, so it gets 1 / (60 + 1) ≈ 0.01639, which matches the combined score 0.016393442. Documents id=1 and id=4 are ranks 2 and 3 for q=solr, so they receive 1 / 62 ≈ 0.01613 and 1 / 63 ≈ 0.01587, matching 0.016129032 and 0.015873017 in the fused result. On the q=vector side, id=2 and id=5 are ranks 1 and 2, so they receive 1 / 61 and 1 / 62, the same values as the rank-1 and rank-2 hits from q=solr. No document appears in both lists, so each fused score consists of a single term.

The combined result list is therefore: first all the rank-1 docs from both queries (id=3 and id=2), then all the rank-2 docs (id=5 and id=1), then the rank-3 doc (id=4). That ordering is exactly what you would expect from RRF given these two input rankings.
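The arithmetic above can be reproduced outside Solr with a few lines of Python. This is a minimal sketch of the standard RRF formula applied to the two baseline rankings, not the code from the PR; the function name rrf_fuse is made up for illustration.

```python
from collections import defaultdict

def rrf_fuse(rankings, k=60):
    """Fuse ranked lists of document IDs with Reciprocal Rank Fusion.

    rankings: list of lists, each ordered best-first.
    Returns (doc_id, score) pairs sorted by descending fused score.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by score only; tied documents keep their first-seen order.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

q1_solr = ["3", "1", "4"]   # baseline ranking for q=solr
q2_vector = ["2", "5"]      # baseline ranking for q=vector

fused = rrf_fuse([q1_solr, q2_vector])
for doc_id, score in fused:
    print(doc_id, round(score, 9))
```

The scores agree with Solr's to about eight decimal places (Solr reports single-precision floats). The order among tied documents, such as id=5 and id=1, is arbitrary in this sketch and may differ from the order Solr returns.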

9. Run an actual hybrid (keyword + vector) combined query

Now let’s move to an example that actually illustrates the benefit of hybrid search.

In this step we’ll switch from minimal examples to a small but structured demo corpus indexed into the hybrid collection.

We created a set of short, single-topic paragraphs that naturally fall into a few clusters:

- Cheap phones / smartphones – docs 1, 2, 3, 4, 6, 15 (with 5 as a near miss):

  - e.g.: "cheap phones under 200 dollars" buying guide aimed at cost-sensitive users.

- Travel to Japan – docs 7 and 8:

  - e.g.: Finding cheap flights to Tokyo using alerts and flexible dates.

- Remote work and distributed teams – docs 9 and 10:

  - These discuss work-from-home guidelines, async communication, and managing teams across time zones.

- Search / ranking / IR concepts – docs 11–14:

  - e.g.: BM25 ranking (term frequency, document length, etc.), neural retrieval, hybrid search, and why keyword ranking is still a strong baseline.

So the collection is intentionally small, but it contains overlapping semantics and a few "traps" (like cheap flights vs cheap phones) that let hybrid search show its behavior.

For vector search, each document’s text field was embedded with a sentence-transformer model:

- Model: sentence-transformers/all-MiniLM-L6-v2

- Type: SBERT-style SentenceTransformers model

- Language: English

- Embedding dimension: 384

- Typical use cases: semantic search, clustering, similarity

In code, we encoded all document texts with normalize_embeddings=True (so vectors lie on the unit sphere, which works well with cosine similarity), and stored each resulting 384-dim array into the Solr dense vector field vector.

That gives us a corpus where BM25 mostly reacts to literal phrases like "cheap phones" or "iPhone for sale", while the vector field captures semantic neighbors like "budget smartphones", "affordable mid-range devices", or "used phone offers", and the combined query handler can fuse those two views via RRF.
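For readers who want to reproduce the indexing step, the following is a rough sketch of what the embedding code could look like. It assumes the sentence-transformers package is installed and that Solr is listening on localhost:8983 with the hybrid collection from step 6. The helper names (embed_texts, to_solr_docs, post_docs) are illustrative and not taken from the script actually used for this article.

```python
import json
import urllib.request

SOLR_UPDATE_URL = "http://localhost:8983/solr/hybrid/update?commit=true"

def embed_texts(texts):
    """Encode texts into unit-length vectors with all-MiniLM-L6-v2."""
    # Imported lazily so the other helpers work without the package installed.
    from sentence_transformers import SentenceTransformer
    model = SentenceTransformer("sentence-transformers/all-MiniLM-L6-v2")
    return model.encode(texts, normalize_embeddings=True).tolist()

def to_solr_docs(texts_by_id, vectors, dim=384):
    """Pair each document with its embedding, checking the dimension."""
    docs = []
    for (doc_id, text), vector in zip(texts_by_id.items(), vectors):
        if len(vector) != dim:
            raise ValueError(f"doc {doc_id}: expected {dim} dims, got {len(vector)}")
        docs.append({"id": doc_id, "text": text, "vector": vector})
    return docs

def post_docs(docs, url=SOLR_UPDATE_URL):
    """POST the documents to Solr's JSON update endpoint."""
    request = urllib.request.Request(
        url,
        data=json.dumps(docs).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

With a dict texts mapping document IDs to paragraphs, the three helpers chain as post_docs(to_solr_docs(texts, embed_texts(list(texts.values())))). The dimension check exists because a mismatch with the field's vectorDimension is an easy mistake to make when switching embedding models.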

9.1 Keyword-only view: cheap phones

First, a plain BM25 query:

curl "http://localhost:8983/solr/hybrid/select?wt=json" \

--get \

--data-urlencode "q=cheap phones" \

--data-urlencode "df=text" \

--data-urlencode "rows=10" \

--data-urlencode "fl=id,score,text"

In my run, Solr returned:

"docs":[{

"id":"5",

"text":"This report analyzes enterprise mobile strategies. Large companies rarely buy cheap phones; instead they standardize on a small set of secure, well supported devices. Cost matters, but reliability, security patches, and fleet management tools often matter more.",

"score":1.2165878

},{

"id":"1",

"text":"This guide compares cheap phones under 200 dollars. We focus on low cost Android devices that still feel fast for everyday tasks like messaging, web browsing, and social media. If you want the cheapest phone that does not feel completely sluggish, start here.",

"score":1.1558574

},{

"id":"7",

"text":"Looking for cheap flights to Tokyo can be frustrating. Prices fluctuate every day, so this tutorial shows how to track airfare with alerts, flexible dates, and alternative airports to find low cost tickets to Japan without spending hours refreshing search results.",

"score":0.69270647

},{

"id":"6",

"text":"We compare mid range Android phones to top tier flagships. The mid range devices are not the cheapest phones on the market, but they strike a balance between price and performance and are often recommended for people who do not need cutting edge cameras.",

"score":0.6504394

},{

"id":"2",

"text":"Students often look for budget smartphones that can handle online classes, video calls, and note taking apps. This article reviews affordable mid range Android phones that cost less than a typical flagship but still offer decent cameras and battery life.",

"score":0.4867006

},{

"id":"3",

"text":"Flagship phones such as the latest iPhone are incredibly powerful, but they are not exactly inexpensive. We explain why premium devices command a higher price and when it might be worth paying extra for features like advanced cameras and long term software support.",

"score":0.47698247

}]

BM25 does what you’d expect: it leans heavily on literal "cheap" + "phones", and doesn’t know that "budget smartphones" or "affordable devices" are essentially the same concept.

9.2 Vector-only view: semantic "cheap phones"

Next, we embed the phrase "cheap phones" and run a kNN query against the vector field using the {!knn} parser:

# Pseudocode – you’d use your own embedding array here

EMBED='[0.12,0.34,0.56, ... ]' # same dimension as your vector field

curl "http://localhost:8983/solr/hybrid/select?wt=json" \

--data-urlencode 'q={!knn f=vector topK=10}'"$EMBED" \

--data-urlencode 'fl=id,score,text' \

--data-urlencode 'rows=10'

The top neighbors in my run were:

"docs":[{

"id":"1",

"text":"This guide compares cheap phones under 200 dollars. We focus on low cost Android devices that still feel fast for everyday tasks like messaging, web browsing, and social media. If you want the cheapest phone that does not feel completely sluggish, start here.",

"score":0.8472254

},{

"id":"2",

"text":"Students often look for budget smartphones that can handle online classes, video calls, and note taking apps. This article reviews affordable mid range Android phones that cost less than a typical flagship but still offer decent cameras and battery life.",

"score":0.81867886

},{

"id":"3",

"text":"Flagship phones such as the latest iPhone are incredibly powerful, but they are not exactly inexpensive. We explain why premium devices command a higher price and when it might be worth paying extra for features like advanced cameras and long term software support.",

"score":0.8162396

},{

"id":"6",

"text":"We compare mid range Android phones to top tier flagships. The mid range devices are not the cheapest phones on the market, but they strike a balance between price and performance and are often recommended for people who do not need cutting edge cameras.",

"score":0.77509284

},{

"id":"15",

"text":"Online marketplaces often advertise massive discounts on flagship smartphones, but many of the cheapest listings come from unknown sellers. This article explains how to evaluate used phone offers, avoid scams, and decide when a deal is truly good value.",

"score":0.7708108

},{

"id":"5",

"text":"This report analyzes enterprise mobile strategies. Large companies rarely buy cheap phones; instead they standardize on a small set of secure, well supported devices. Cost matters, but reliability, security patches, and fleet management tools often matter more.",

"score":0.76603323

},{

"id":"4",

"text":"If you want an iPhone for sale at a reasonable price, buying last year s model or a refurbished unit can save a lot of money. This guide lists older iPhones and certified pre owned devices that feel modern but are much more affordable than brand new flagships.",

"score":0.7364917

},{

"id":"7",

"text":"Looking for cheap flights to Tokyo can be frustrating. Prices fluctuate every day, so this tutorial shows how to track airfare with alerts, flexible dates, and alternative airports to find low cost tickets to Japan without spending hours refreshing search results.",

"score":0.6251746

},{

"id":"8",

"text":"Travelers who want affordable airfare deals to Japan should consider flying into Osaka or Nagoya instead of Tokyo. By being flexible with dates and airports, many people manage to book tickets that are hundreds of dollars cheaper than the obvious direct routes.",

"score":0.56306547

},{

"id":"9",

"text":"Many companies are updating their remote work policy. Some now support hybrid work, where employees split their time between home and the office. Others adopt fully distributed teams and rely on detailed work from home guidelines to keep communication smooth.",

"score":0.54597485

}]

Here, id=1 is the clear winner, and several documents that never mention "cheap phones" explicitly still get good scores because they talk about affordable / budget phones.

9.3 Hybrid view: keyword + vector via combined query

Now we ask Solr to run both queries as sub-queries and fuse the two ranked lists using RRF.

The JSON request looks like this:

curl -X POST "http://localhost:8983/solr/hybrid/combined?wt=json" \

-H "Content-Type: application/json" -d '{

"queries": {

"keyword": {

"edismax": {

"qf": "text",

"query": "cheap phones"

}

},

"knn": {

"knn": {

"f": "vector",

"query": "[0.12, 0.34, 0.56, 0.78]", // your query embedding here

"topK": 10

}

}

},

"limit": 10,

"fields": ["id", "score", "text"],

"params": {

"combiner": "true",

"combiner.algorithm": "rrf",

"combiner.upTo": "10",

"combiner.rrf.k": "60",

"combiner.query": ["keyword", "knn"],

"combiner.resultKey": ["keyword", "knn"]

}

}'
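Because a real query embedding has 384 values, it is usually easier to build this request body in code than to paste it into a curl command. The sketch below generates the JSON body only. It assumes the kNN sub-query takes the vector as a bracketed string under "query" with topK as a local parameter, mirroring the {!knn f=vector topK=10} form from section 9.2; that shape is an assumption about how the branch parses the JSON DSL. The function name build_combined_request is illustrative.

```python
import json

def build_combined_request(keyword_query, query_vector, top_k=10, rrf_k=60, limit=10):
    """Build the JSON body for a keyword + kNN request to /combined."""
    return {
        "queries": {
            "keyword": {"edismax": {"qf": "text", "query": keyword_query}},
            # Assumption: vector passed as a bracketed string, topK as a local param.
            "knn": {
                "knn": {
                    "f": "vector",
                    "topK": top_k,
                    "query": json.dumps(list(query_vector)),
                }
            },
        },
        "limit": limit,
        "fields": ["id", "score", "text"],
        "params": {
            "combiner": "true",
            "combiner.algorithm": "rrf",
            "combiner.upTo": str(top_k),
            "combiner.rrf.k": str(rrf_k),
            "combiner.query": ["keyword", "knn"],
            "combiner.resultKey": ["keyword", "knn"],
        },
    }

# Four placeholder values; a real query vector would have 384 dimensions.
body = build_combined_request("cheap phones", [0.12, 0.34, 0.56, 0.78])
print(json.dumps(body, indent=2))
```

The printed body can be saved to a file and sent with curl using -d @file, or posted directly from Python.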

On this tiny dataset, the fused ranking I got was:

"docs":[{

"id":"1",

"text":"This guide compares cheap phones under 200 dollars. We focus on low cost Android devices that still feel fast for everyday tasks like messaging, web browsing, and social media. If you want the cheapest phone that does not feel completely sluggish, start here.",

"score":0.032522473

},{

"id":"5",

"text":"This report analyzes enterprise mobile strategies. Large companies rarely buy cheap phones; instead they standardize on a small set of secure, well supported devices. Cost matters, but reliability, security patches, and fleet management tools often matter more.",

"score":0.031544957

},{

"id":"2",

"text":"Students often look for budget smartphones that can handle online classes, video calls, and note taking apps. This article reviews affordable mid range Android phones that cost less than a typical flagship but still offer decent cameras and battery life.",

"score":0.031513646

},{

"id":"6",

"text":"We compare mid range Android phones to top tier flagships. The mid range devices are not the cheapest phones on the market, but they strike a balance between price and performance and are often recommended for people who do not need cutting edge cameras.",

"score":0.03125

},{

"id":"3",

"text":"Flagship phones such as the latest iPhone are incredibly powerful, but they are not exactly inexpensive. We explain why premium devices command a higher price and when it might be worth paying extra for features like advanced cameras and long term software support.",

"score":0.031024532

},{

"id":"7",

"text":"Looking for cheap flights to Tokyo can be frustrating. Prices fluctuate every day, so this tutorial shows how to track airfare with alerts, flexible dates, and alternative airports to find low cost tickets to Japan without spending hours refreshing search results.",

"score":0.0305789

},{

"id":"15",

"text":"Online marketplaces often advertise massive discounts on flagship smartphones, but many of the cheapest listings come from unknown sellers. This article explains how to evaluate used phone offers, avoid scams, and decide when a deal is truly good value.",

"score":0.015384615

},{

"id":"4",

"text":"If you want an iPhone for sale at a reasonable price, buying last year s model or a refurbished unit can save a lot of money. This guide lists older iPhones and certified pre owned devices that feel modern but are much more affordable than brand new flagships.",

"score":0.014925373

},{

"id":"8",

"text":"Travelers who want affordable airfare deals to Japan should consider flying into Osaka or Nagoya instead of Tokyo. By being flexible with dates and airports, many people manage to book tickets that are hundreds of dollars cheaper than the obvious direct routes.",

"score":0.014492754

},{

"id":"9",

"text":"Many companies are updating their remote work policy. Some now support hybrid work, where employees split their time between home and the office. Others adopt fully distributed teams and rely on detailed work from home guidelines to keep communication smooth.",

"score":0.014285714

}]

The key point is how the top few positions behave:

- id=1 ranks #2 in keyword and #1 in vector, so RRF gives it the highest combined score.

- id=5 is #1 in keyword but only #6 in vector, so it lands at #2 overall.

- id=2 is #5 in keyword but #2 in vector, so it follows closely at #3 overall.

RRF only cares about ranks, not raw scores. Documents that are consistently good in both lists (like id=1) beat documents that are excellent in only one of them (like id=2 or id=5). That’s exactly the kind of behavior we want from a hybrid system: the final ranking blends keyword precision and semantic recall, instead of letting either one completely dominate.

10. Inspect debug

Once you have the hybrid query working, it’s worth looking at how the RRF combiner arrived at its scores. You can do this simply by adding the usual debug parameters to the same /combined request:

- debugQuery=on

- debug=results

In the JSON response, Solr adds a debug section. For combined queries, that section includes a combinerExplanations map that spells out exactly how each RRF score was computed from the per-query ranks.

Here is an excerpt from one of my runs with the "cheap phones" hybrid query (keyword + kNN):

"combinerExplanations":{

"1":"org.apache.lucene.search.Explanation:0.032522473 = 1/(60+1) + 1/(60+2) because its ranks were: 1 for query(keyword), 2 for query(knn)\n",

"5":"org.apache.lucene.search.Explanation:0.031544957 = 1/(60+6) + 1/(60+1) because its ranks were: 6 for query(keyword), 1 for query(knn)\n",

"2":"org.apache.lucene.search.Explanation:0.031513646 = 1/(60+2) + 1/(60+5) because its ranks were: 2 for query(keyword), 5 for query(knn)\n",

"6":"org.apache.lucene.search.Explanation:0.03125 = 1/(60+4) + 1/(60+4) because its ranks were: 4 for query(keyword), 4 for query(knn)\n",

"3":"org.apache.lucene.search.Explanation:0.031024532 = 1/(60+3) + 1/(60+6) because its ranks were: 3 for query(keyword), 6 for query(knn)\n",

"7":"org.apache.lucene.search.Explanation:0.0305789 = 1/(60+8) + 1/(60+3) because its ranks were: 8 for query(keyword), 3 for query(knn)\n",

"15":"org.apache.lucene.search.Explanation:0.015384615 = 1/(60+5) because its ranks were: 5 for query(keyword), not in the results for query(knn)\n",

"4":"org.apache.lucene.search.Explanation:0.014925373 = 1/(60+7) because its ranks were: 7 for query(keyword), not in the results for query(knn)\n",

"8":"org.apache.lucene.search.Explanation:0.014492754 = 1/(60+9) because its ranks were: 9 for query(keyword), not in the results for query(knn)\n",

"9":"org.apache.lucene.search.Explanation:0.014285714 = 1/(60+10) because its ranks were: 10 for query(keyword), not in the results for query(knn)\n"

}

A few things to notice:

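- The keys ("1", "5", "2", …) are the document IDs.

- Each value is a Lucene Explanation string showing the RRF formula:

  - With k = 60, a doc that’s rank 1 in keyword and rank 2 in knn gets score = 1/(60+1) + 1/(60+2) ≈ 0.03252.

  - If a doc appears in only one list (e.g. keyword but not knn), it gets a single term like 1/(60+5).

- The tail text ("because its ranks were: 1 for query(keyword), 2 for query(knn)") tells you exactly which rank it had in each sub-query’s result list.

- Cross-check the labels against sections 9.1 and 9.2: in this excerpt the keyword and knn labels appear swapped relative to the standalone rankings (id=1, for example, was #2 for the keyword query and #1 for kNN, and id=15 was returned only by the kNN query), so rely on the rank values rather than the labels when comparing.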

Reading this section, you can verify:

- Which sub-queries contributed to each document.

- How much each rank position contributes under RRF.

- Why certain "bridge" documents (strong in both lists) surface at the top of the hybrid ranking.

For debugging or writing about the feature, this combinerExplanations block is the clearest proof that the PR is doing true RRF over per-query rankings, not just re-sorting by one underlying score.
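If you want to check the explanations mechanically, a small script can pull the 1/(k+rank) terms out of each string and compare their sum with the score Solr printed. The sketch below relies on the exact text format shown in the excerpt above, which may change as the PR evolves; the function names are illustrative.

```python
import re

# Matches terms such as 1/(60+2) and captures k and the rank.
TERM = re.compile(r"1/\((\d+)\+(\d+)\)")

def recompute_rrf(explanation):
    """Sum the 1/(k+rank) terms found in one combinerExplanations string."""
    formula = explanation.split(" because ")[0]
    return sum(1.0 / (int(k) + int(rank)) for k, rank in TERM.findall(formula))

def reported_score(explanation):
    """Extract the score printed between 'Explanation:' and the '=' sign."""
    return float(explanation.split("Explanation:")[1].split("=")[0])

# Two entries copied from the excerpt above.
explanations = {
    "1": "org.apache.lucene.search.Explanation:0.032522473 = 1/(60+1) + 1/(60+2) because its ranks were: 1 for query(keyword), 2 for query(knn)\n",
    "15": "org.apache.lucene.search.Explanation:0.015384615 = 1/(60+5) because its ranks were: 5 for query(keyword), not in the results for query(knn)\n",
}

for doc_id, text in explanations.items():
    delta = abs(recompute_rrf(text) - reported_score(text))
    print(doc_id, "matches" if delta < 1e-6 else "differs")
```

The tolerance of 1e-6 allows for the fact that Solr reports single-precision scores while Python sums in double precision.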

Taken together, these experiments show that Sonu Sharma’s Combined Query + RRF work is not just an interesting design on paper, but a concrete, working path to practical hybrid search in Solr. We cloned the branch, wired up a clean configset and schema, verified that the new handler accepts JSON queries, and walked from simple multi-keyword fusion all the way to a genuinely hybrid scenario where BM25 and dense vectors each bring different strengths and the RRF combiner balances them in a transparent, explainable way.

The combinerExplanations output in particular makes it very clear that the implementation is doing real rank fusion over multiple result lists, not a cosmetic re-sorting, and the “cheap phones” corpus gives an intuitive feel for how lexical precision and semantic recall interact. Our impression so far is that this is a promising foundation for production-ready hybrid search in Solr, and it’s well worth the time for Solr users and contributors to pull the branch, run their own workloads, and help push the feature toward inclusion in a future release.